Mapping the Rise in State-Level AI Regulation in the US: September 2024 Review

- September 25, 2024

- Snippets

Practices & Technologies

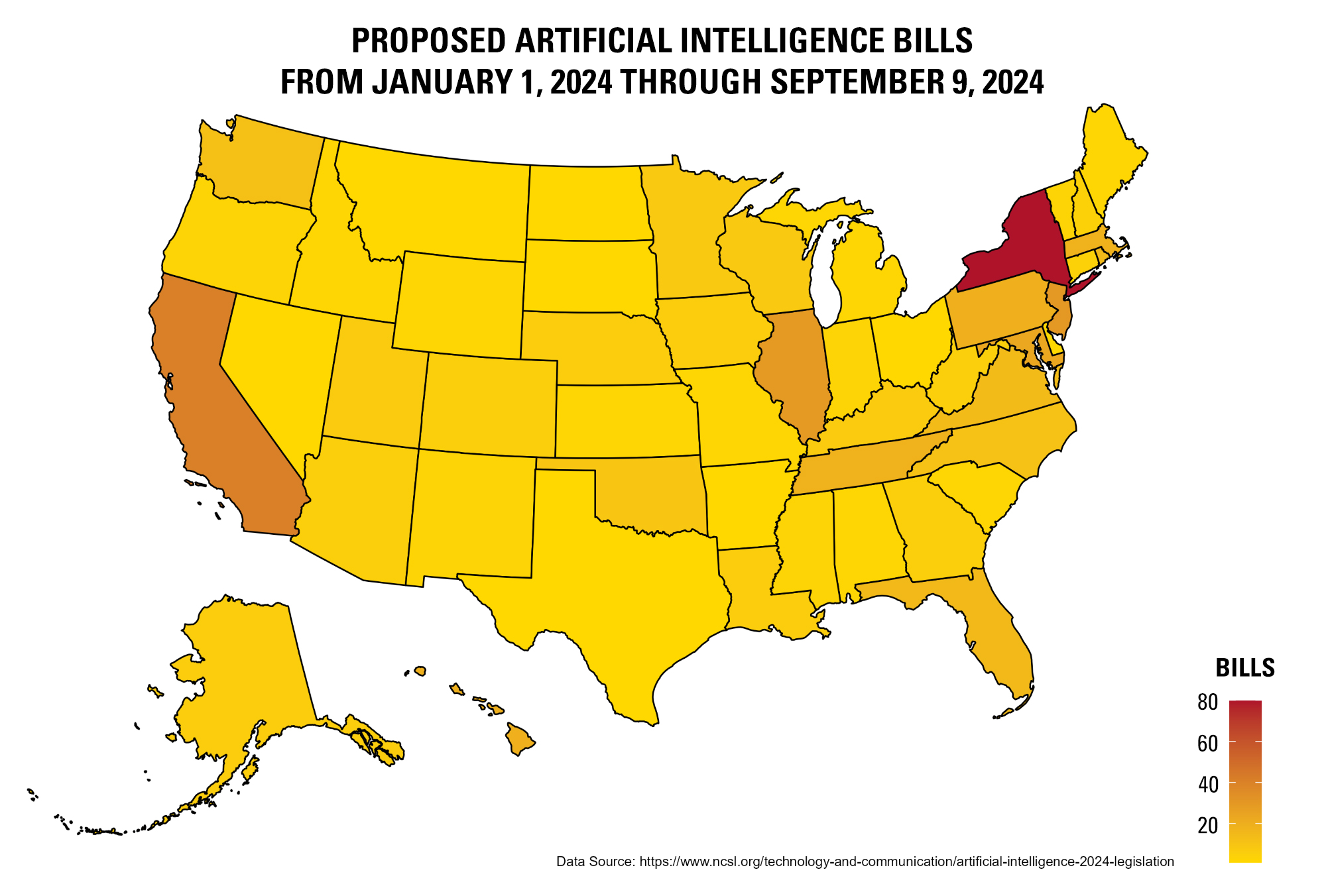

This year has witnessed a rapid increase in legislation seeking to study and regulate machine learning and artificial intelligence (AI) models. So far in 2024, Congress has doubled the number of proposed bills seeking to study and regulate AI compared with 2023.[1] The jump has been even more dramatic on the state level. In 2023, legislators in 30 states, Puerto Rico and the District of Columbia proposed around 130 bills regarding machine learning and AI.[2] As of September 9, 2024, legislators in all 50 states, Puerto Rico, the U.S. Virgin Islands and the District of Columbia have proposed almost 500 bills.[3] These state-level bills are directed at topics including (i) regulating government use of AI models, (ii) regulating private sector use of AI models, (iii) conducting studies and impact assessments of AI models and (iv) regulating the use of AI models in elections.[4] We discuss each category of legislation below.

The first set of state-level bills concerns regulation of the use of AI models by state governmental entities such as law enforcement. Legislators across 35 states have proposed more than 150 such bills so far in 2024. To date, 19 of these bills have been enacted across 13 states. One recently enacted bill is Maryland S 818, which provides broad guardrails for governmental use of AI systems. This bill requires state governmental entities that employ “high-risk artificial intelligence” to conduct regular impact assessments of such AI-based systems. Furthermore, state entities that become aware of harm to particular individuals due to use of the AI systems must provide notice to the entire class of negatively impacted individuals and offer an opportunity to opt out. The term “high-risk artificial intelligence” is defined as AI that is “a risk to individuals or communities.” This includes AI whose output “serves as a basis [of a] decision or action that is significantly likely to affect civil rights, civil liberties, equal opportunities, access to critical resources[] or privacy” and AI that has “the potential to [impact] human life, well-being[] or critical infrastructure.” It is not yet clear how state agencies and/or citizens will interpret these provisions, but it appears that the governor’s office and/or agencies may have wide discretion regarding the extent of enforcement. Similar legislation across several states in this area indicates a trend toward granting great power to the executive branch to restrict or allow continued development and use of AI models.

The second grouping of state-level bills involves regulating private sector use of AI models. Legislators in 29 states have proposed nearly 150 such bills so far in 2024. Of these, 11 bills have been enacted across 9 states. One bill of note is California AB 2013, recently sent to the governor, which requires companies, prior to the release or modification of an AI system made available to citizens of California, to make information about the system available to the public. This includes high-level information about the data used to train the AI system, such as the types of data used, whether personal information was used, what data cleaning and processing steps were used, the time period of data collection and the owners or sources of the training data. This bill is emblematic of a movement toward greater transparency regarding how AI systems are trained and used, and toward disclosure of the use of AI through watermarking of AI-generated media.

The third grouping of state-level bills concerns studies and impact assessments of AI models. This grouping comprises bills mandating that private and/or public entities assess the impact of an AI system on a particular industry or group, as well as bills creating task forces or other groups to oversee AI or advise the legislature on it.[5] So far, 2024 has seen more than 70 bills across 29 states proposed to this end. One recently enacted bill is Indiana S 150, which created the “Artificial Intelligence Task Force” composed of state legislators, academic professionals, legal experts and industry representatives. The bill directs the task force to study both AI technology developed, used or considered for use by state agencies and recommendations made by similar groups in other states on the benefits, risks and effects of such AI technology on the rights and interests of Indiana residents, such as legal rights and economic welfare. This bill foreshadows greater scrutiny of AI technologies and their impact on constituents by state legislatures in the near future.

The fourth set of bills directly regulates the use of AI systems in elections.[6] Bills in this category are directed to several efforts, including regulation of AI in the processing of election results. Additionally, this type of legislation may require disclosure of AI-based political advertising or social media content. Thus far in 2024, legislators in 24 states and Puerto Rico have proposed 52 such bills. One enacted bill from a swing state highlighting this trend is Wisconsin A 664. This bill mandates that audio and video communications regarding candidates, issues or referendums that include “synthetic media” must explicitly disclose this fact in the communication. The term “synthetic media” is defined as “audio or video content that is substantially produced in whole or in part by means of generative artificial intelligence.” Failure to provide such disclosures results in a fine per violation, though liability is limited to the creators of the communications and not third-party broadcasters or hosts. Such legislation reflects state legislators’ attempts to mitigate the rise of AI-generated content used in the present election campaign.[7]

To conclude, 2024 has already seen a substantial increase in the number of proposed and enacted bills directed at regulating AI. This trend is expected to continue as task forces, such as the one created by Indiana S 150, present their findings to state legislatures. Diverse and evolving definitions of terms like “high-risk” AI across states, as in Maryland S 818, could influence other state and federal legislation on such technologies moving forward. These legislative efforts must adopt a dynamic approach to address emerging challenges and support innovation, as Wisconsin A 664 and California AB 2013 attempt to do, while protecting public and private interests.

[1] https://www.brennancenter.org/our-work/research-reports/artificial-intelligence-legislation-tracker

[2] https://www.ncsl.org/technology-and-communication/artificial-intelligence-2023-legislation

[3] https://www.ncsl.org/technology-and-communication/artificial-intelligence-2024-legislation

[4] Id.

[5] Id.

[6] Another article that highlights state-level efforts to regulate the use of AI in elections is available here.

[7] Fake Taylor Swift Endorsement, Fake President Biden Calls, Fake Images of President Trump.